How to scrape NFT prices on OpenSea

https://twitter.com/rarecandyio/status/1521548615579226114

It may shock you to know that in the beginning, back when the internet was still young, there were no Astro Apes or Slurp Juices in sight.

Life on this early internet was simpler and more innocent. Websites were plain, wholesome creations, unsullied by ‘Slurp Juice’. Writing a web scraper was simple; a site’s data loaded instantly within its HTML.

Fast-forward to 2022 and the web is filled with billion-dollar ApeCoins and complex JavaScript apps that stream in data progressively after page load.

At axiom, we don’t know how to use multiple slurp juices on a single ape... but we do know about web-scraping for price tracking.

If you need access to NFT data, but don’t have an API, you’ve come to the right place.

# Scraping OpenSea

We’re going to illustrate this by looking at OpenSea.io - the most popular NFT marketplace - but you can take all the advice here and apply it to other sites. Many of our customers scrape Poocoin.app or analytics dashboards using the same techniques.

# Don’t Scrape from Cloud IPs

OpenSea - and other websites protected by Cloudflare - prevent cloud IPs on AWS, Google and others from accessing their website. This is primarily for DDoS protection, but scrapers can get caught up in their net too.

As a result, you can’t use axiom’s cloud product, which is hosted on AWS, to scrape these sites.

Luckily, our desktop application comes to the rescue!

# Scrape Locally, over VPN

We recommend scraping locally, and using a VPN to avoid your IP being blocked.

To do this, you can download our desktop application.

If you’re looking for VPN providers, we recommend: https://tailscale.com/ and https://www.expressvpn.com/

# Scrape In 2 Stages - Links, then Pages

Most customers try to just scrape everything from a single page, for example on a collection page such as: https://opensea.io/collection/dava-humanoids

We’ve noticed the selectors for price on these pages are inconsistent - this means your scraper is quite likely to break, or have missing data.

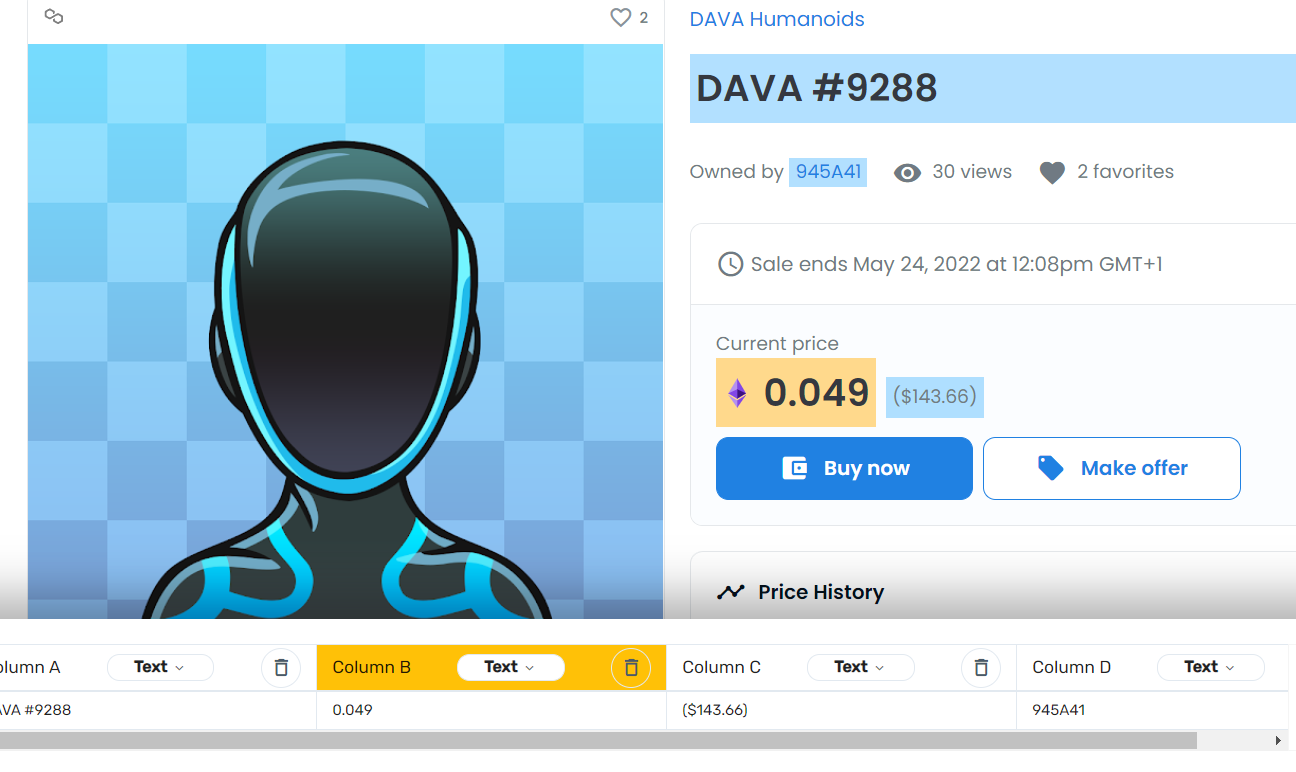

In contrast, item pages like https://opensea.io/assets/matic/0xf81cb9bfea10d94801f3e445d3d818e72e8d1da4/18921 have more static selectors, and more data to hand.

For this reason, we recommend breaking the scrape into two passes: scrape links and metadata from the collection page, then use those links to scrape the remaining data on each item page. This may take slightly longer, but the solution is more robust and flexible.

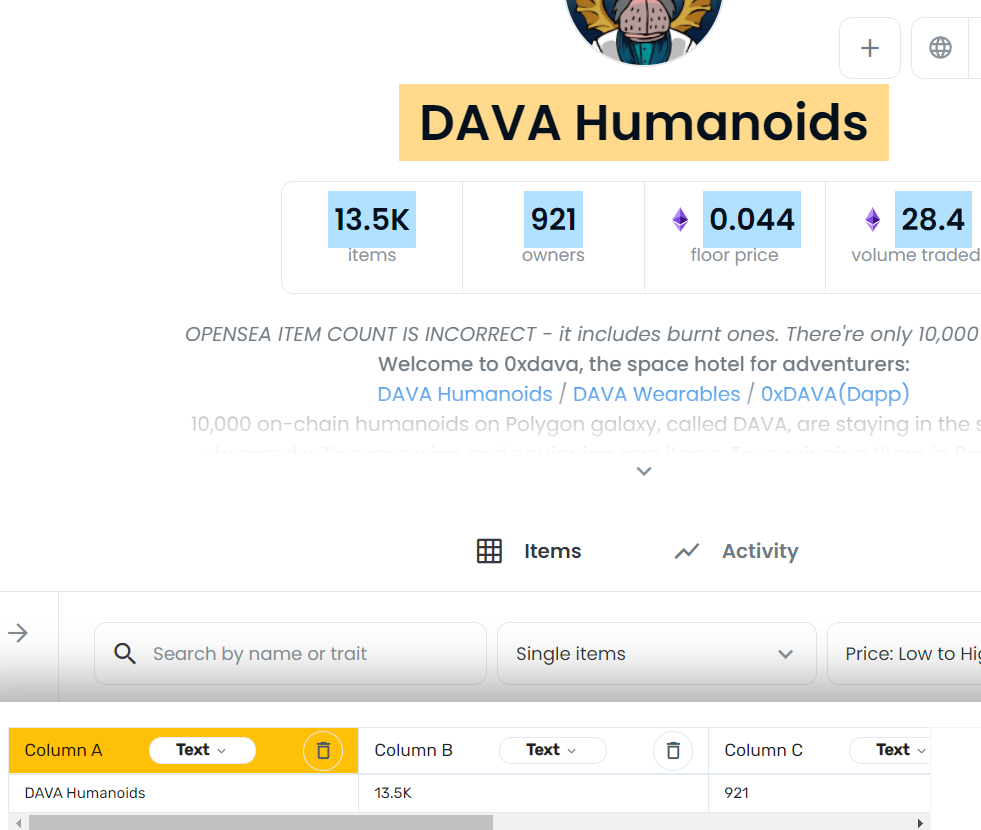
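If you prefer to see the two-pass idea in code, here is a minimal Python sketch. It is an illustration only, not how axiom works under the hood. `fetch` and `parse_item` are hypothetical callables you would supply yourself (for example, something that returns the rendered HTML from a real browser, since the data streams in after page load), and the assumption that item links contain `/assets/` is taken only from the example URL above.

```python
from html.parser import HTMLParser
from typing import Callable, Dict, List
from urllib.parse import urljoin


class ItemLinkParser(HTMLParser):
    """Collects <a href> values that look like item pages.

    Assumption: item links contain '/assets/', as in the example URL above.
    """

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href and "/assets/" in href:
            url = urljoin(self.base_url, href)  # make relative links absolute
            if url not in self.links:  # keep page order, drop duplicates
                self.links.append(url)


def collect_item_links(collection_html: str, base_url: str) -> List[str]:
    """Pass 1: pull the item links out of the collection page."""
    parser = ItemLinkParser(base_url)
    parser.feed(collection_html)
    return parser.links


def scrape_in_two_passes(
    collection_url: str,
    fetch: Callable[[str], str],
    parse_item: Callable[[str], Dict[str, str]],
) -> List[Dict[str, str]]:
    """Pass 2: visit each link from pass 1 and parse the item page."""
    links = collect_item_links(fetch(collection_url), collection_url)
    rows = []
    for link in links:
        row = dict(parse_item(fetch(link)))
        row["url"] = link  # remember where each row came from
        rows.append(row)
    return rows
```

Keeping the link collection separate from the item parsing means a selector change on the collection page only breaks pass 1, and you can re-run pass 2 from a saved list of links.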

# 1. Collection Page: Scrape Floor Price & Item Links

e.g. https://opensea.io/collection/dava-humanoids

The data you’ll most likely want from the collection page is the floor price - you can grab it alongside the number of items and the volume.

Alongside this, you will need to scrape the Item Links, which you’ll pass as input to Step 2.

When done, you can write this data to a Google Sheet.

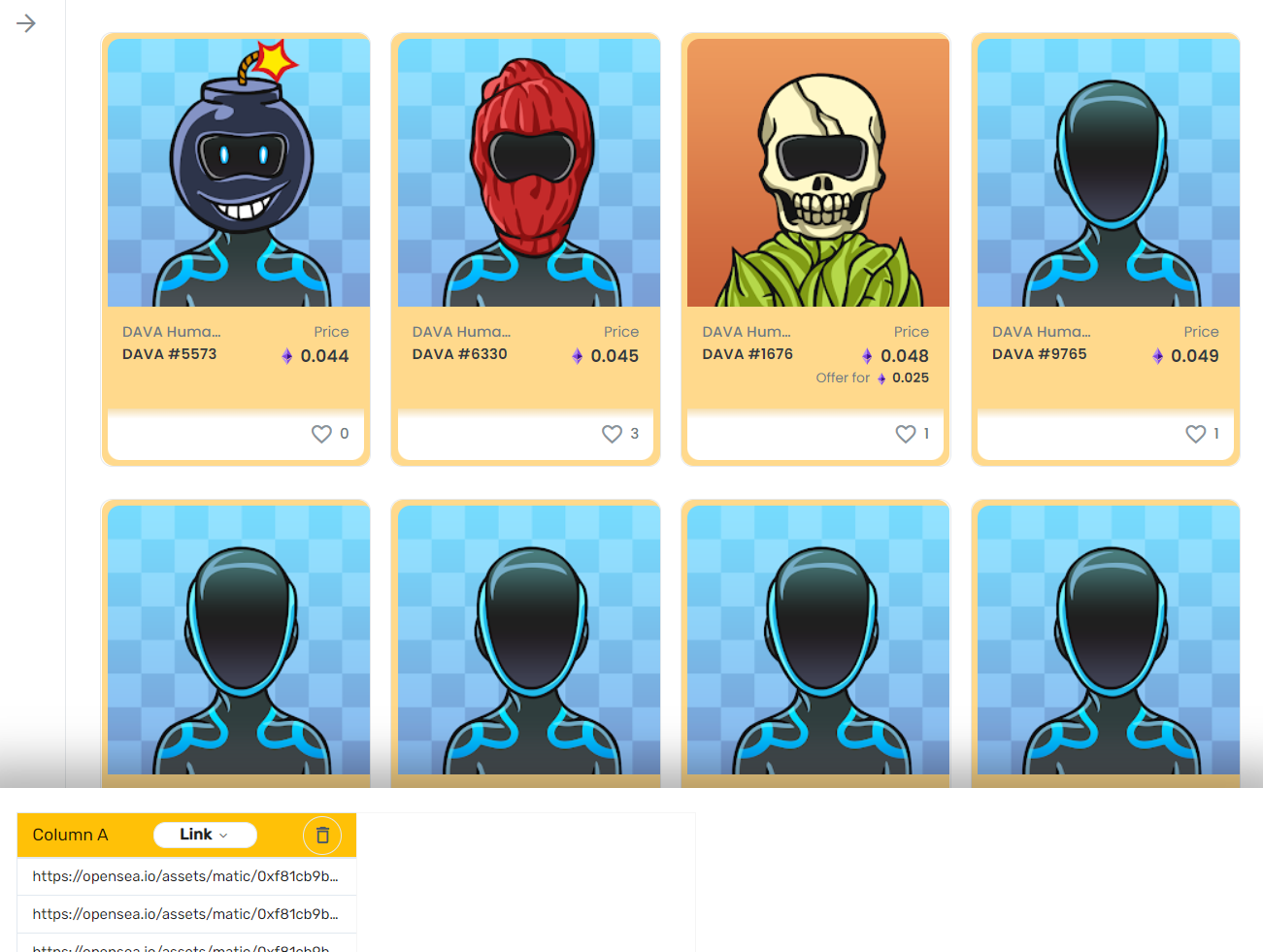

# 2. Item Links: Scrape Item Data

e.g. https://opensea.io/assets/matic/0xf81cb9bfea10d94801f3e445d3d818e72e8d1da4/18921

In step 2, you take the link data from the Google Sheet populated in Step 1, visit each link, and grab data from the visited page.

# Run Batch Scraping on Google Sheets

We see many a young scraper try to scrape 1000s of entries in one long pass, then try to write everything to a single CSV. This is a recipe for tears and disaster, like keeping no backups of your work, or putting all your eggs in one basket.

At axiom, we take utmost care of our eggs, baskets, and customers!

As a result, we strongly recommend batching your scraping process by writing to Google Sheets as you go.

This has many advantages:

- You can recover from failure. If your scrape is interrupted, you can pick up where you left off.

- Data is available progressively, not just at the end.

- You can use all the powerful features of Google Sheets - post-process and filter your data, then pass it back to other automations.

Here’s an article that shows you how:

https://axiom.ai/docs/tutorials/long-bot-runs
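To make the batching idea concrete, here is a minimal Python sketch of "write as you go, resume where you left off". It is a sketch under stated assumptions: a local CSV file that is appended to after every batch stands in for the Google Sheet, and `scrape_one`, the `url`/`price` columns and the batch size are all hypothetical names chosen for illustration.

```python
import csv
import os
from typing import Callable, Dict, Iterable, List, Set

FIELDS = ["url", "price"]  # hypothetical columns for this sketch


def load_done_urls(path: str) -> Set[str]:
    """Return the URLs already saved, so a re-run can skip them."""
    if not os.path.exists(path):
        return set()
    with open(path, newline="", encoding="utf-8") as f:
        return {row["url"] for row in csv.DictReader(f)}


def append_rows(path: str, rows: List[Dict[str, str]]) -> None:
    """Append one batch; write the header only when the file is new."""
    is_new = not os.path.exists(path)
    with open(path, "a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        if is_new:
            writer.writeheader()
        writer.writerows(rows)


def scrape_in_batches(
    urls: Iterable[str],
    scrape_one: Callable[[str], Dict[str, str]],
    path: str,
    batch_size: int = 25,
) -> int:
    """Scrape URLs not yet saved, flushing every `batch_size` rows.

    Returns the number of rows written during this run.
    """
    done = load_done_urls(path)
    batch: List[Dict[str, str]] = []
    written = 0
    for url in urls:
        if url in done:
            continue  # saved by an earlier run
        batch.append(scrape_one(url))
        if len(batch) >= batch_size:
            append_rows(path, batch)
            written += len(batch)
            batch = []
    if batch:  # flush the final, partial batch
        append_rows(path, batch)
        written += len(batch)
    return written
```

If a run is interrupted, at most one unsaved batch is lost; running the same function again skips every URL already written and carries on from there.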

# Page-Changes & Missing Data

Only a few things in life are certain: death, taxes, the second law of thermodynamics, and web scrapers breaking.

Luckily, our docs have some guidelines to mitigate scraper issues:

https://axiom.ai/blog/5-problems-webscrapers

Sadly, we don’t have guidelines to mitigate the effects of taxes (or death).

# Schedule or Trigger Events with Zapier & Webhooks

Once your scraper is up and running, you may want to run it on a schedule.

But you can turn your scraper into an even more powerful tool by integrating it with Zapier’s APIs.

Using API + RPA Automation together opens up a world of possibilities:

- Trigger an automation when a price changes

- Get notified when new items appear from a creator

- Input your data into any system with an API
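As a rough illustration of the first two ideas, the Python sketch below compares two price snapshots and posts each change to a webhook. Everything here is an assumption made for the example: the snapshot shape (item name mapped to price), the event names, and a webhook that accepts a JSON `POST`. The webhook URL in the usage note is a placeholder, not a real endpoint.

```python
import json
import urllib.request
from typing import Callable, Dict, List, Optional


def detect_price_changes(
    previous: Dict[str, float], current: Dict[str, float]
) -> List[Dict[str, object]]:
    """Compare two snapshots and list new items and changed prices."""
    changes: List[Dict[str, object]] = []
    for item, new_price in current.items():
        old_price: Optional[float] = previous.get(item)
        if old_price is None:
            changes.append(
                {"item": item, "event": "new_item", "old": None, "new": new_price}
            )
        elif new_price != old_price:
            changes.append(
                {"item": item, "event": "price_changed", "old": old_price, "new": new_price}
            )
    return changes


def build_webhook_request(webhook_url: str, change: Dict[str, object]) -> urllib.request.Request:
    """Build a JSON POST request for one change (assumed webhook format)."""
    body = json.dumps(change).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def notify(
    webhook_url: str,
    changes: List[Dict[str, object]],
    send: Callable[[urllib.request.Request], object] = urllib.request.urlopen,
) -> int:
    """Send one request per change; `send` can be swapped out for testing."""
    for change in changes:
        send(build_webhook_request(webhook_url, change))
    return len(changes)
```

For example, `notify("https://hooks.example.com/your-hook", detect_price_changes(yesterday, today))` would post one message per changed or new item, where `yesterday` and `today` are snapshots read from your sheet.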

Using UI Automation + API Automation together means you can literally automate anything - that’s not an exaggeration.

Our users have surprised us by building new systems, and even whole new businesses, by automating something new!

https://axiom.ai/docs/tutorials/webhooks/

https://axiom.ai/blog/rpa-api-automation

# Rinse & Repeat for Nbatopshot.com, Poocoin.app and others

We’ve focused on OpenSea.io here, but the principles generalise to other sites with listing pages, such as Nbatopshot.com and Poocoin.app.

# Reach out if you get stuck

If you ever get stuck while building web scrapers, please don’t hesitate to contact us for one-on-one help, or take a look at our documentation.